In a significant development within the field of artificial intelligence, ByteDance has announced the launch of Seed1.5-VL, a new vision-language foundation model tailored for enhanced multimodal understanding and reasoning. This innovative model aims to bridge the gap between visual and textual data, enabling more sophisticated interpretation and interaction across diverse applications. By harnessing advanced algorithms and extensive training datasets, Seed1.5-VL seeks to facilitate a deeper comprehension of context and meaning in a variety of scenarios, paving the way for advancements in AI-driven technologies. This article delves into the features, capabilities, and potential implications of Seed1.5-VL in the evolving landscape of multimodal AI systems.

Table of Contents

- Introduction to Seed1.5-VL and ByteDance’s Vision for Multimodal Models

- Key Features of Seed1.5-VL and Its Technological Advancements

- The Role of Vision-Language Models in Modern AI Applications

- Comparative Analysis of Seed1.5-VL with Existing Multimodal Models

- Potential Use Cases for Seed1.5-VL Across Different Industries

- Challenges in Developing and Implementing Vision-Language Models

- Evaluation Metrics for Assessing Multimodal Understanding and Reasoning

- Recommendations for Integrating Seed1.5-VL into Existing AI Infrastructure

- The Importance of Multimodal Training Datasets in Enhancing Model Performance

- Future Directions for Vision-Language Research Beyond Seed1.5-VL

- Ethical Considerations in the Deployment of Advanced AI Models

- User Perspectives and Community Feedback on Seed1.5-VL

- Conclusion: The Impact of Seed1.5-VL on the Future of Multimodal AI

- Q&A

- Insights and Conclusions

Introduction to Seed1.5-VL and ByteDance’s Vision for Multimodal Models

ByteDance’s introduction of Seed1.5-VL marks a notable step in the evolution of multimodal AI systems. The model is designed not just to analyze text or images in isolation but to reason about how the two interact, which could change the way people engage with content across platforms. The potential effects reach many sectors, from educational tools that adapt to students’ questions to content tools that tailor media to a particular audience. Consider, for instance, a virtual assistant that not only processes a user’s query but also interprets accompanying images or video, giving it a fuller picture of a complex request.

In practical terms, Seed1.5-VL pairs a vision encoder with a large language model, which allows it to reason about visual content in context. It acts somewhat like a translator that works not only with words but also with images, video, and even actions. The model sits between striking potential and real challenges, such as biases in training data and limited interpretability. As AI spreads into entertainment, education, and beyond, a robust multimodal framework becomes increasingly valuable. Social media analytics tools, for example, could assess sentiment in both text and imagery, giving brands a deeper view of audience engagement and supporting better-informed marketing strategies. As Seed1.5-VL matures, it may help make our interactions with technology richer, more integrated, and more natural.

Key Features of Seed1.5-VL

| Feature | Description |
|---|---|
| Multimodal Integration | Processes text together with images and video to produce coherent outputs. |
| Enhanced Reasoning | Supports multi-step reasoning and context-aware responses. |
| User Adaptability | Can support personalized experiences when applications supply user context in the prompt. |

Key Features of Seed1.5-VL and Its Technological Advancements

Seed1.5-VL brings together a set of features aimed at pushing machine comprehension and reasoning in a multimodal context. At its core is a close integration of vision and language, which lets the system not only interpret text but also relate what it sees to what it reads. Whereas earlier pipelines often handled text and imagery separately, Seed1.5-VL is designed to consider both at once. Think of watching a movie while reading its script, and then imagine analyzing each character’s dialogue alongside their expressions and surroundings as the scene unfolds. This combined capability could benefit sectors such as educational technology, where learning can be personalized with visual aids matched to each learner’s needs.

Seed1.5-VL also relies on attention mechanisms, which let the model weigh how strongly each word relates to each part of an image in a given context. This loosely mirrors the way people focus on relevant details while filtering out distractions, and it matters for applications such as customer support automation and content creation, where nuanced understanding is key. Consider a customer query about a product that includes a photo: a text-only system may need several rounds of clarification, whereas a model that reads the photo and the question together can often infer the intent and respond directly, cutting response time and improving user satisfaction. As industries adopt such systems, human-computer interaction may shift toward platforms that more reliably understand what users need.
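To make the idea of text weighing parts of an image concrete, here is a minimal sketch of scaled dot-product attention in which a text-token query attends over image-patch embeddings. It is a generic textbook illustration in pure Python with tiny hand-picked vectors, not Seed1.5-VL’s actual implementation; the function names and values are hypothetical. In decoder-style vision-language models, image and text tokens typically share the language model’s self-attention, but the weighting step is the same.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query: Vector, keys: List[Vector], values: List[Vector]) -> Vector:
    """Scaled dot-product attention for a single query vector.

    The query (e.g. a text token) is compared with every key (e.g. an image
    patch); the resulting weights mix the corresponding value vectors.
    """
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]


# Toy example: two "image patches" with 2-d embeddings (hypothetical values).
patches = [[1.0, 0.0], [0.0, 1.0]]
text_query = [4.0, 0.0]  # a text token whose embedding resembles the first patch
out = attend(text_query, keys=patches, values=patches)
print(out)
```

Because the query points in the same direction as the first patch, most of the attention weight (and therefore most of the output) comes from that patch.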

The Role of Vision-Language Models in Modern AI Applications

As we look at vision-language models, it is worth appreciating how these technologies are redefining multimodal understanding in artificial intelligence. Models like ByteDance’s Seed1.5-VL illustrate how far machines have come in interpreting and integrating visual and textual information. Loosely speaking, these models associate images with concepts and words much as people do, enabling AI to perform tasks that require reasoning across both. Imagine an assistant that not only processes your commands but also places them in context, understanding not just the “what” but the “why” behind an image or a caption. This capability opens the door to applications including content moderation, personalized recommendations, and accessibility tools such as image descriptions for visually impaired users. As digital ecosystems grow more complex and interdependent, such functionality is becoming a practical requirement rather than a novelty.

The implications extend well beyond AI research into industries such as education, healthcare, and entertainment. In education, Seed1.5-VL could support interactive learning by pairing images with explanations tailored to a student’s level of comprehension. In healthcare, such models could assist diagnosis by analyzing medical imaging alongside patient reports, although clinical use would require rigorous validation. As society grapples with the ethics and regulation of AI, a sentiment often voiced by industry leaders is worth keeping in mind: “AI should enhance the human experience, not replace it.” This view encourages both innovation and meaningful dialogue about the societal impact of these technologies. By pairing technical advances with a sense of responsibility, we can work toward an AI landscape that genuinely enriches our lives.

Comparative Analysis of Seed1.5-VL with Existing Multimodal Models

Looking closely at Seed1.5-VL, it stands out not merely for being multimodal but for how it is built. Earlier models such as CLIP and ALIGN paved the way by training separate image and text encoders with a contrastive objective, so that matching images and captions land close together in a shared embedding space. That design excels at retrieval and zero-shot classification, but a single embedding per image and per caption leaves little room for detailed, context-dependent reasoning. Seed1.5-VL instead passes visual tokens from its vision encoder into a large language model, so the text it reads and generates can draw on specific regions of an image. This flexibility resembles a seasoned interpreter who, on hearing an unfamiliar dialect, immediately recalibrates to grasp the speaker’s intent. It matters for tasks like visual question answering (VQA) and image captioning, where context is king.
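For comparison, CLIP-style models score image-caption pairs by the cosine similarity of two separately computed embeddings and are trained with a symmetric contrastive (InfoNCE) loss. The sketch below computes that loss for a small batch in pure Python. The embeddings and the temperature value are hypothetical, and the code illustrates the earlier dual-encoder approach, not anything specific to Seed1.5-VL.

```python
import math
from typing import List

Vector = List[float]


def normalize(v: Vector) -> Vector:
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cross_entropy(logits: List[float], target: int) -> float:
    """Cross-entropy of a softmax over logits against the target index."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(l - m) for l in logits))
    return log_sum - logits[target]


def clip_loss(image_embs: List[Vector], text_embs: List[Vector], temperature: float = 0.07) -> float:
    """Symmetric InfoNCE loss: pair i is the positive, every other pair is a negative."""
    imgs = [normalize(v) for v in image_embs]
    txts = [normalize(v) for v in text_embs]
    n = len(imgs)
    # logits[i][j] = cosine similarity of image i and caption j, divided by the temperature
    logits = [[sum(a * b for a, b in zip(imgs[i], txts[j])) / temperature for j in range(n)]
              for i in range(n)]
    image_to_text = sum(cross_entropy(logits[i], i) for i in range(n)) / n
    text_to_image = sum(cross_entropy([logits[i][j] for i in range(n)], j) for j in range(n)) / n
    return (image_to_text + text_to_image) / 2


# Matched pairs should give a lower loss than mismatched ones (hypothetical embeddings).
images = [[1.0, 0.0], [0.0, 1.0]]
captions = [[0.9, 0.1], [0.1, 0.9]]
print(clip_loss(images, captions), clip_loss(images, list(reversed(captions))))
```

Note that each image and caption is reduced to one vector before comparison, which is exactly the limitation the paragraph above describes.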

In practice, Seed1.5-VL broadens the range of potential applications. In e-commerce, for instance, visual search could move beyond recognizing a product toward reasoning about a shopper’s preferences and context, letting platforms personalize experiences in a way that feels intuitive and engaging. In healthcare, stronger interpretation could help streamline medical imaging analysis by letting practitioners combine findings from images with patient history. This is especially relevant as regulators scrutinize AI deployment in sensitive domains, where a model’s ability to explain its reasoning in natural language may help with compliance and ethical review.

| Feature | Seed1.5-VL | Earlier contrastive models (e.g., CLIP, ALIGN) |
|---|---|---|
| Cross-Modal Understanding | Joint reasoning over visual tokens and text inside a language model | Global image-text similarity in a shared embedding space |
| Real-World Applications | Proposed uses include e-commerce, healthcare, and creative industries | Mainly zero-shot image classification and image-text retrieval |
| Context Sensitivity | Generated text can draw on specific image regions | One pooled embedding per image and per caption |

Potential Use Cases for Seed1.5-VL Across Different Industries

The introduction of Seed1.5-VL opens up possibilities across many industries. In healthcare, imagine AI systems that interpret a patient’s medical history by analyzing textual records together with diagnostic images. With Seed1.5-VL, doctors could receive insights drawn from multiple sources in one place, supporting better decision-making and more tailored treatment plans, provided the outputs are clinically validated. This echoes earlier moments when digital tools improved patient care, such as the early days of telemedicine. Pairing such analysis with secure, tamper-evident record-keeping, such as immutable on-chain records, could also strengthen trust and transparency in healthcare services.

Beyond healthcare, the entertainment industry could also benefit from Seed1.5-VL’s multimodal capabilities. Content creators might use the model to analyze scripts, storyboards, and video footage together, helping them predict audience engagement and tailor content. This would deepen personalization much as algorithmic recommendations once transformed streaming platforms. Editors, too, could receive AI assistance in understanding and assembling scenes based on narrative structure, character development, and visual aesthetics. Such tools promise artistic experimentation and reflect a broader trend of AI making creative processes more efficient and engaging.

| Industry | Potential Benefit |
|---|---|
| Healthcare | Improved patient diagnostics through integrated analysis of medical records and imaging |
| Entertainment | Enhanced content creation through seamless blending of multimedia elements |
| Education | Personalized learning experiences via understanding both text and visual content |
| Marketing | Targeted advertising based on comprehensive user engagement analysis |

Challenges in Developing and Implementing Vision-Language Models

Developing and deploying vision-language models like Seed1.5-VL involves challenges that extend beyond technical hurdles. One of the most significant is the complexity of data alignment. Vision-language models require vast datasets in which images are paired with accurate textual descriptions. This demands not only extensive data collection but also careful annotation, so that the data captures context, nuance, and the variability of human communication. Crowdsourcing can increase volume, but it often introduces inconsistencies and biases, so developers must prioritize data curation to maintain quality. In my own early experiments, training a language model on a dataset with non-standard annotations produced convoluted outputs, which underscored how much effective multimodal understanding depends on precise data alignment.
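One common way to quantify the crowdsourcing inconsistencies described above is to have two annotators label the same items and compute Cohen’s kappa, which corrects raw agreement for the agreement expected by chance. The sketch below is a generic, stdlib-only implementation with hypothetical labels; it illustrates a curation check, not any pipeline ByteDance has described.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(a: Sequence[Hashable], b: Sequence[Hashable]) -> float:
    """Cohen's kappa between two annotators' labels for the same items."""
    if len(a) != len(b) or not a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    counts_a, counts_b = Counter(a), Counter(b)
    # Chance agreement: probability that both annotators independently pick the same label.
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical labels for four image-caption pairs.
annotator_1 = ["cat", "cat", "dog", "dog"]
annotator_2 = ["cat", "dog", "dog", "dog"]
print(cohens_kappa(annotator_1, annotator_2))
```

Here raw agreement is 75%, but half of that is expected by chance, so kappa is 0.5. Batches or annotators with low kappa (a threshold you would choose for your own data) can be routed for re-annotation.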

Scaling model training is equally daunting. As models grow more sophisticated, they demand more computational resources, which strains budgets even at companies the size of ByteDance. It is like trying to power a small village with a single wind turbine: inadequate resources sharply limit what is possible. Distributed training and techniques such as transfer learning therefore become vital for working within those limits. The challenge is not only technical, either; it extends to ethics and governance. As these models develop, the risk of perpetuating biases against marginalized groups grows, so developers must do more than assemble powerful tools and commit to ongoing reflection and inclusive practices. The backlash against biased facial recognition algorithms is a notable reminder that great power demands greater responsibility.
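A rough way to see why compute becomes the bottleneck is the widely used rule of thumb that training a dense transformer costs about 6 × N × D floating-point operations, where N is the number of parameters (for a Mixture-of-Experts model, the parameters active per token) and D is the number of training tokens. The figures below are hypothetical placeholders chosen for the arithmetic, not ByteDance’s actual training budget, and the approximation ignores attention overhead and the vision encoder.

```python
def training_flops(params: float, tokens: float) -> float:
    """Approximate training compute with the common C ~= 6 * N * D rule of thumb."""
    return 6.0 * params * tokens


def gpu_hours(total_flops: float, flops_per_gpu_per_s: float, utilization: float) -> float:
    """Convert a FLOP budget into GPU-hours at a given sustained utilization."""
    return total_flops / (flops_per_gpu_per_s * utilization) / 3600.0


# Hypothetical inputs: 20e9 active parameters, 1e12 training tokens,
# accelerators with 1e15 peak FLOP/s running at 40% utilization.
c = training_flops(20e9, 1e12)
print(f"{c:.2e} FLOPs, about {gpu_hours(c, 1e15, 0.4):,.0f} GPU-hours")
```

With these placeholder numbers the estimate is 1.2e23 FLOPs, or roughly 83,000 GPU-hours, which is why spreading the work across many accelerators is unavoidable.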

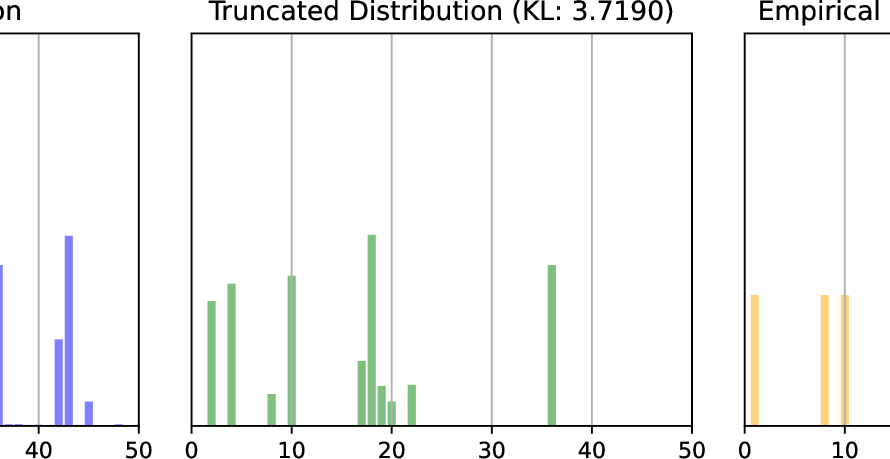

Evaluation Metrics for Assessing Multimodal Understanding and Reasoning

To assess a multimodal model like Seed1.5-VL objectively, it helps to use a suite of evaluation metrics that covers both language comprehension and visual inference. Common choices include accuracy, F1 score, and BLEU for language-related tasks, and mean Average Precision (mAP) and Intersection over Union (IoU) for visual tasks such as detection and grounding. A holistic evaluation shows how well the model integrates these modalities. In image captioning, for example, a model must describe the image accurately and in fluent, context-appropriate language, so there is rarely a single correct answer to match; captions are therefore usually scored with n-gram overlap metrics such as BLEU or CIDEr rather than exact-match accuracy.
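For grounding tasks, where a model is asked to output a bounding box for an object mentioned in text, IoU compares the predicted box with the reference box. A minimal sketch, assuming boxes are given as (x_min, y_min, x_max, y_max) in pixel coordinates. A prediction is often counted as correct when IoU is at least 0.5, though the threshold varies by benchmark.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)  # zero if the boxes do not overlap
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


print(iou((0, 0, 2, 2), (1, 1, 3, 3)))  # overlap 1, union 7 -> about 0.143
```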

Benchmark datasets designed specifically for multimodal evaluation are also becoming more intricate. Their annotations often go beyond basic labels to include relational descriptions or complex scene interpretations that require understanding both visual and textual components. Evaluations that probe semantic alignment between modalities and context-aware reasoning are becoming more common because they address shortcomings of traditional assessments. It is a bit like developing a palate for flavors we have only just begun to taste. In my experience, building explainability and fairness checks into evaluation not only makes these models more robust but also builds trust in the industries that use them, such as healthcare, automated content generation, and virtual assistants, where a misinterpretation can have real-world consequences.

| Metric | Purpose | Importance |
|---|---|---|
| Accuracy | Measures the share of correct predictions | Basic benchmark for performance |
| F1 Score | Harmonic mean of precision and recall | Crucial for imbalanced datasets |
| mAP | Evaluates object detection effectiveness | Standard in visual tasks |
| BLEU Score | Measures n-gram overlap between generated and reference text | Common in captioning and translation |
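The accuracy and F1 rows of the table can be computed directly from a model’s yes/no answers, for example on a binary visual question answering subset. A minimal sketch with hypothetical labels, treating `True` as the positive class:

```python
from typing import Dict, Sequence


def binary_metrics(y_true: Sequence[bool], y_pred: Sequence[bool]) -> Dict[str, float]:
    """Accuracy, precision, recall, and F1 with True as the positive class."""
    tp = sum(t and p for t, p in zip(y_true, y_pred))
    fp = sum((not t) and p for t, p in zip(y_true, y_pred))
    fn = sum(t and (not p) for t, p in zip(y_true, y_pred))
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": correct / len(y_true), "precision": precision, "recall": recall, "f1": f1}


# Imbalanced toy set: 2 positives, 6 negatives (hypothetical answers).
truth = [True, True, False, False, False, False, False, False]
pred = [True, False, False, False, False, False, False, False]
print(binary_metrics(truth, pred))
```

Here accuracy is 0.875 while F1 is only about 0.67, because the model missed half of the rare positive cases; this is why F1 matters for imbalanced datasets.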

Comprehensive evaluation of this kind not only clarifies a model’s strengths and weaknesses but also supports the broader push for ethical, responsible AI. Developers, ethics boards, and everyday users alike gain a clearer view of how multimodal models may shape their interactions with AI. In a world increasingly shaped by the interplay of text and images, from social media feeds to e-commerce platforms, reliable and interpretable assessment is essential to ensure that models like Seed1.5-VL deliver on their promise effectively and responsibly.

Recommendations for Integrating Seed1.5-VL into Existing AI Infrastructure

Integrating Seed1.5-VL into your existing AI infrastructure can substantially expand its multimodal capabilities. First, I recommend a thorough audit of your current models and workflows to identify where Seed1.5-VL’s vision-language capabilities could improve tasks such as image captioning or video analysis. In a previous project focused on marketing insights, for instance, introducing a multimodal model significantly improved our content creation efficiency by generating tailored images and text together, which made for a more engaging consumer experience.

Next, consider a phased implementation. Start with a pilot project in a controlled environment before rolling out more widely, and align Seed1.5-VL’s functionality with specific business objectives, such as improving user interaction or automating content generation. API integration can connect existing models to Seed1.5-VL without overhauling your tech stack. When I incorporated large language models into earlier systems, phased integration minimized disruption and allowed for iterative feedback. Finally, don’t overlook community support: user groups and forums can offer practical insights and best practices for real-world challenges.
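As an illustration of the API-integration step, the sketch below builds a chat-completions style request that combines an image and a question in one user message. It assumes an OpenAI-compatible message format with `image_url` content parts; whether a given Seed1.5-VL endpoint accepts this format, and which model identifier to use, are assumptions to check against the provider’s documentation. `MODEL_ID` is a hypothetical placeholder, and the code only builds the JSON body without sending it.

```python
import base64
import json
from typing import Any, Dict

# Hypothetical placeholder; replace with the model ID from your provider's documentation.
MODEL_ID = "seed1.5-vl-placeholder"


def build_vision_request(image_bytes: bytes, question: str, mime: str = "image/png") -> Dict[str, Any]:
    """Build an OpenAI-style chat request with one image part and one text part."""
    data_url = f"data:{mime};base64," + base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": MODEL_ID,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "image_url", "image_url": {"url": data_url}},
                    {"type": "text", "text": question},
                ],
            }
        ],
    }


# Placeholder bytes stand in for a real image file.
payload = build_vision_request(b"\x89PNG...", "What product is shown in this photo?")
print(json.dumps(payload)[:120])
```

Keeping request construction in one small function makes it easier to swap endpoints or models during a pilot without touching the rest of the stack.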

| Integration Steps | Example Use Case |
|---|---|
| Conduct Infrastructure Audit | Identify existing workflows for image/text generation. |
| Phased Implementation | Start with a pilot project in a targeted department. |
| Facilitate API Integration | Ensure models can communicate without disruption. |
| Connect with User Communities | Gain insights from peers in the AI field. |
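For the phased-implementation row, one simple way to run a pilot is to route a fixed percentage of users to the new model by hashing a stable user ID, so each user consistently sees the same variant. A minimal sketch; the function name, salt, and 10% figure are hypothetical choices, not a prescribed rollout policy.

```python
import hashlib


def in_pilot(user_id: str, rollout_percent: float, salt: str = "seed-vl-pilot") -> bool:
    """Deterministically assign a user to the pilot via a hash bucket in [0, 100)."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) % 10000 / 100.0  # 0.00 .. 99.99
    return bucket < rollout_percent


users = [f"user-{i}" for i in range(1000)]
share = sum(in_pilot(u, 10.0) for u in users) / len(users)
print(f"{share:.1%} of users routed to the pilot")
```

Raising `rollout_percent` later keeps existing pilot users in the pilot, because a user’s bucket never changes; only the threshold moves.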

The Importance of Multimodal Training Datasets in Enhancing Model Performance

In the rapidly evolving landscape of artificial intelligence, multimodal training data is becoming a necessity rather than an enhancement. Recent models like Seed1.5-VL highlight the difference between traditional models that rely on a single modality and those that take a richer, integrated approach. Multimodal models can draw on text, images, video, and even audio, combining them to build a deeper understanding of context and meaning; Seed1.5-VL itself focuses on images and video alongside text. Imagine an AI that doesn’t simply read a recipe but also learns from video tutorials and ingredient photos, greatly improving its ability to help. Combining modalities lets models build nuanced representations and reason in more sophisticated ways, much as an artist might interpret a song through visuals or a chef might reinvent a traditional dish by drawing on techniques from other cuisines.

This holistic approach demands not only sophisticated algorithms but also carefully curated datasets that represent diverse scenarios and perspectives. The implications reach beyond the lab into sectors like healthcare, education, and entertainment. In healthcare, for instance, a multimodal AI that analyzes patient history documents alongside medical imaging may uncover patterns that a single-modality approach would miss. Consider these key impacts of multimodal training datasets:

| Impact Area | Multimodal Advantage |
|---|---|
| Healthcare | Enhanced diagnostic accuracy through integrated imaging and clinical data. |
| Education | Personalized learning experiences by assessing text comprehension alongside visual content. |
| Entertainment | Innovative content recommendations leveraging user preferences across multiple media types. |

This interconnected framework showcases not just how AI evolves but also how it begins to engage with the multifaceted nature of human experience. As we navigate these advances, the emphasis on multimodal training datasets signifies a shift in perspective—recognizing that true intelligence mimics our own: a seamless blend of sight, sound, and text, rooted in shared human experience.
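Curating a diverse training mix is partly a sampling question. One widely used heuristic, shown here as a generic sketch rather than Seed1.5-VL’s documented recipe, samples each data source with probability proportional to its size raised to 1/T: T = 1 keeps natural proportions, while larger T up-weights small sources such as specialized medical or educational data. The source names and sizes are hypothetical.

```python
import random
from typing import Dict


def mixture_weights(sizes: Dict[str, int], temperature: float = 1.0) -> Dict[str, float]:
    """Sampling probability per source, proportional to size ** (1 / temperature)."""
    scaled = {name: n ** (1.0 / temperature) for name, n in sizes.items()}
    total = sum(scaled.values())
    return {name: s / total for name, s in scaled.items()}


# Hypothetical corpus sizes (number of image-text examples per source).
sources = {"web_captions": 900_000, "charts_docs": 90_000, "medical_imaging": 10_000}
print(mixture_weights(sources, temperature=1.0))
print(mixture_weights(sources, temperature=3.0))

# Draw the source for each example in a batch of 8 using the tempered weights.
rng = random.Random(0)
weights = mixture_weights(sources, temperature=3.0)
batch_sources = rng.choices(list(weights), weights=list(weights.values()), k=8)
print(batch_sources)
```

With T = 3 the medical source rises from 1% of samples to roughly 13%, at the cost of repeating its examples more often.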

Future Directions for Vision-Language Research Beyond Seed1.5-VL

As vision-language research enters its next wave, models like Seed1.5-VL are just the beginning of a much larger conversation in artificial intelligence. Advances in multimodal understanding and reasoning are likely to affect sectors including education, healthcare, and the creative industries. Imagine a healthcare AI that reads medical literature and patient records together to help draft a tailored treatment plan. The implications are vast and raise questions of ethics, transparency, and data privacy. Future models may also interpret cultural nuances, drawing on different knowledge bases to improve human-AI collaboration.

Going forward, researchers will need to prioritize the seamless integration of multimodal systems into various applications. This involves pushing the boundaries of model training and incorporating more complex real-world data that draws from broader sources. Strategies to consider include:

- Interdisciplinary Collaboration: Pooling insights from linguistics, cognitive science, and visual aesthetics to create more nuanced models.

- Robust Feedback Loops: Implementing user-driven feedback systems to refine model responses based on real-world interaction.

- Ethical Frameworks: Developing standards to address biases in data and ensure equitable use of AI technologies.

Beyond Seed1.5-VL, future models will likely go further than today’s image and video understanding to handle streaming content such as live video feeds and real-time interactions. Imagine an AI that follows a livestreamed class and interacts with the audience, adapting its responses to real-time feedback. As sentiment analysis grows more sophisticated, AI systems may also detect emotional tone across text, speech, and visuals, enabling deeper human connections in virtual spaces. Such developments suggest that AI could not only augment existing technologies but also reshape how people engage with one another across industries.
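Handling video typically starts with choosing which frames to feed the model under a fixed token budget. A minimal sketch of uniform frame sampling, assuming a hypothetical cap on frames per clip; Seed1.5-VL’s own video pipeline may sample frames differently, so treat this as a generic illustration.

```python
from typing import List, Tuple


def sample_frames(duration_s: float, fps: float, max_frames: int) -> List[Tuple[int, float]]:
    """Pick up to max_frames evenly spaced frames; return (frame_index, timestamp_s) pairs."""
    total = int(duration_s * fps)
    if total <= 0 or max_frames <= 0:
        return []
    count = min(total, max_frames)
    step = total / count
    # Take the middle frame of each of `count` equal-length segments.
    indices = [int(step * i + step / 2) for i in range(count)]
    return [(idx, round(idx / fps, 3)) for idx in indices]


print(sample_frames(duration_s=10.0, fps=30.0, max_frames=4))
```

Returning timestamps alongside frame indices lets a model’s answer refer back to when something happened, which matters for live or long-form content.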

Ethical Considerations in the Deployment of Advanced AI Models

The introduction of Seed1.5-VL marks a pivotal moment not only for ByteDance but for AI deployment more broadly, especially in multimodal technology. As these advanced models gain traction, it is crucial to step back and consider the ethics of deploying them. They are trained on vast amounts of data, some of which may include sensitive or personal information. This raises serious questions about data privacy, informed consent, and biases embedded in the learned models. Systems that integrate vision and language, for instance, may inadvertently propagate existing societal biases if their training data is not carefully curated. Building frameworks that ensure fair representation in training datasets isn’t just good practice; it is essential for fostering public trust and for ensuring that the models work equally well across demographic groups.

Real-world examples underline the urgency of these considerations. Consider the fallout from biased facial recognition technologies: incidents in which AI models misidentified members of underrepresented racial groups at markedly higher rates caused reputational damage for tech companies and raised concerns about infringements on civil liberties. As ByteDance positions Seed1.5-VL for understanding and reasoning across modalities, it has both an opportunity and a responsibility to set a precedent for ethical AI development. Industry figures such as Sam Altman have publicly stressed the importance of establishing ethical frameworks alongside technological progress. Going forward, developers, policymakers, and the public need to discuss how to oversee and deploy these technologies so that innovation does not come at the cost of societal well-being.
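The facial recognition cases above are a reminder to report error rates per group rather than a single aggregate. A minimal sketch of such a disaggregated evaluation; the group labels and records are hypothetical, and a real audit would also need adequate sample sizes per group and careful definitions of the groups themselves.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def per_group_accuracy(records: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Map (group, prediction_was_correct) records to accuracy per group."""
    totals: Dict[str, int] = defaultdict(int)
    correct: Dict[str, int] = defaultdict(int)
    for group, ok in records:
        totals[group] += 1
        correct[group] += int(ok)
    return {g: correct[g] / totals[g] for g in totals}


def max_accuracy_gap(acc: Dict[str, float]) -> float:
    """Largest difference in accuracy between any two groups."""
    return max(acc.values()) - min(acc.values())


# Hypothetical evaluation records for two groups of 100 examples each.
data = ([("group_a", True)] * 95 + [("group_a", False)] * 5
        + [("group_b", True)] * 80 + [("group_b", False)] * 20)
acc = per_group_accuracy(data)
print(acc, round(max_accuracy_gap(acc), 2))
```

The pooled accuracy here is 87.5%, which hides a 15-point gap between the two groups.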

User Perspectives and Community Feedback on Seed1.5-VL

The introduction of Seed1.5-VL has sparked lively discussion across AI and machine learning communities. Users have pointed out several features that distinguish it from its predecessors, chief among them its ability to interpret visual and textual data together. It can, for instance, analyze a chart alongside the dashboard narrative that accompanies it, producing more robust and nuanced insights than earlier models. This matters in sectors like healthcare, where understanding complex medical data often means combining visual information, such as scans or diagnostic images, with clinical notes. In my experience, a model that excels at this kind of integration can significantly improve diagnostic workflows and patient outcomes.

Community feedback has also highlighted ethical considerations and the need for transparency about how Seed1.5-VL was developed. Many users have raised concerns about biases that may persist in large models, particularly in sensitive applications like predictive policing or credit scoring. In a recent forum discussion, a prominent AI ethicist argued that “advanced models should not only excel in performance but also uphold ethical standards.” This sentiment is shared across many sectors and has prompted calls for exhaustive testing before real-world deployment. Whether Seed1.5-VL’s visual reasoning can make a difference in fields like finance or entertainment depends not only on capability but also on trust: how can we be sure these models operate fairly? As models like Seed1.5-VL continue to push boundaries, stakeholders must address these issues to build public trust and support adoption across domains.

Conclusion: The Impact of Seed1.5-VL on the Future of Multimodal AI

The unveiling of Seed1.5-VL marks a significant milestone in multimodal AI and may reshape how we think about the interplay between language and visual data. Its architecture supports stronger contextual comprehension, enabling applications such as chatbots and virtual assistants to handle nuanced queries better than earlier systems. Imagine asking a virtual assistant not only to identify the objects in a photo but also to relate them to a story or event you experienced. It’s akin to conversing with a well-read friend who connects disparate pieces of information effortlessly. This capability could enrich sectors like education and entertainment, where content can be delivered and personalized in new ways.

The broader implications extend to industries that rely on rich media, such as marketing and analytics. Real-time insights from Seed1.5-VL could enable more targeted advertising and engagement strategies, moving marketing from a push toward a conversation. As AI models grow more adept at understanding both visual and textual nuance, healthcare could also see changes in patient interactions: imagine diagnostic systems that analyze patients’ written descriptions alongside medical imaging to support faster, more effective care. By bridging domains in this way, Seed1.5-VL represents not just a single advance in AI technology but a step toward more interconnected understanding and reasoning.

Q&A

Q&A on ByteDance’s Seed1.5-VL: A Vision-Language Foundation Model

Q1: What is Seed1.5-VL?

A1: Seed1.5-VL is a vision-language foundation model developed by ByteDance. It is designed to enhance general-purpose multimodal understanding and reasoning, integrating both visual and textual data for improved performance in various tasks.

Q2: What are the key features of Seed1.5-VL?

A2: Seed1.5-VL exhibits several key features, including advanced capabilities for multimodal understanding, the ability to process and reason with both images and text, and robust performance across a variety of applications such as image captioning, visual question answering, and cross-modal retrieval.

Q3: How does Seed1.5-VL differ from its predecessors?

A3: Seed1.5-VL builds on earlier models with improved architecture and training methods for integrating visual and textual information. According to ByteDance’s technical report, it combines a 532M-parameter vision encoder with a Mixture-of-Experts (MoE) language model that has 20B active parameters. This design aims to improve reasoning and overall performance on multimodal tasks.

Q4: What potential applications does Seed1.5-VL have?

A4: Potential applications for Seed1.5-VL include, but are not limited to, content creation, search and recommendation systems, educational tools, augmented reality, and customer support, all of which involve understanding and responding to both visual and textual input.

Q5: What industries could benefit from Seed1.5-VL?

A5: Industries that could benefit from Seed1.5-VL include technology, e-commerce, education, entertainment, and any field that relies on the integration of visual data and language, such as marketing and social media.

Q6: Has ByteDance provided any information about the performance metrics of Seed1.5-VL?

A6: Yes. ByteDance’s technical report states that Seed1.5-VL achieves state-of-the-art performance on 38 of the 60 public benchmarks it was evaluated on, suggesting a significant advance over prior models in the same category.

Q7: Is Seed1.5-VL available for public use?

A7: At this time, details regarding public availability, licensing, or accessibility of Seed1.5-VL have not been explicitly stated by ByteDance. Further announcements may clarify its intended use and distribution.

Q8: What are the implications of developing models like Seed1.5-VL for the future of AI?

A8: Models like Seed1.5-VL could significantly advance artificial intelligence by improving machines’ ability to understand and reason across modalities in ways that more closely resemble how people combine sight and language. This could lead to more intuitive human-computer interaction and more effective AI applications across many domains.

Insights and Conclusions

In conclusion, ByteDance’s introduction of Seed1.5-VL marks a significant advancement in the field of multimodal understanding and reasoning. By leveraging the capabilities of a vision-language foundation model, Seed1.5-VL aims to enhance the integration of visual and textual data, thereby facilitating more sophisticated interactions between users and AI systems. As the demand for general-purpose AI solutions continues to grow, developments such as this highlight the ongoing efforts to bridge the gap between different modes of information processing. The potential applications of Seed1.5-VL across various industries may pave the way for innovative solutions that enhance user experience, improve accessibility, and drive efficiency. As research in this area progresses, it will be essential to monitor the impacts and ethical considerations associated with the deployment of such advanced models.