In recent advancements within the field of artificial intelligence, researchers from New York University have introduced a novel approach to enhance the self-verification capabilities of reasoning models. Their innovative method involves a hidden-state probe that enables these models to efficiently determine when their conclusions are accurate. This breakthrough not only streamlines the decision-making process but also contributes to a significant reduction in token usage, with a reported decrease of 24%. This article explores the implications of this research, the mechanics behind the hidden-state probe, and its potential impact on the development of more efficient AI systems.

Table of Contents

- Introduction to Reasoning Models and Self-Verification

- Overview of the NYU Research Initiative

- Understanding Hidden-State Probes in AI

- Mechanisms Behind Efficient Self-Verification

- Impact of the Hidden-State Probe on Token Usage

- Evaluation of Model Accuracy and Confidence Levels

- Comparative Analysis of Traditional vs. New Models

- Real-World Applications of Enhanced Reasoning Models

- Challenges in Implementing Self-Verification Techniques

- Future Implications for AI Research and Development

- Recommendations for Practitioners and Researchers

- Ethical Considerations in AI Verification Methods

- Potential Limitations of Current Findings

- Continued Research Directions for NYU Team

- Conclusion and Broader Impact on AI Technologies

- Q&A

- The Way Forward

Introduction to Reasoning Models and Self-Verification

In the realm of artificial intelligence, particularly in natural language processing, the ability of models to self-verify their outputs marks a substantial leap forward. The innovative research from NYU introduces a hidden-state probe that not only allows AI models to assess their correctness but also achieves efficiency by reducing token usage by an impressive 24%. Imagine this as a system where a student, while solving math problems, can check their work during the process rather than just getting a score at the end. This technique effectively transforms the way we think about AI reasoning, turning it from a passive (and often error-prone) receiver of information into an active participant capable of intuition and self-correction. This newfound capability could pave the way for AI applications that require more reliability and accountability, such as in healthcare, finance, and even autonomous vehicles.

Moreover, the implications of this research extend far beyond mere efficiency gains; they highlight a critical evolution in the relationship between human users and AI systems. As these models become adept at distinguishing between correct and incorrect outputs, we enter a future where AI not only generates content but also acts as a self-aware advisor. This shift could significantly impact sectors like customer service, where inaccurate responses can lead to dissatisfaction, or journalism, where verification of facts is paramount. By employing efficient self-verification, we could see a transformative enhancement in the quality of output generated by AI, making interactions feel more intuitive and trustworthy. As we witness these advancements, it’s essential to reflect on the historical evolution of AI; much like the early days of computers that needed constant human intervention, the journey toward self-sufficiency in AI reasoning models underscores a crucial pivot in our technological trajectory.

Overview of the NYU Research Initiative

The NYU Research Initiative represents a significant advancement in the field of AI reasoning models, particularly with the introduction of a novel hidden-state probe designed to enhance self-verification capabilities. This innovation enables models to discern when they are making accurate predictions, ultimately driving efficiency in terms of token usage by an impressive 24%. Such a reduction is no small feat; it highlights a pivotal moment in AI optimization, where fine-tuning performance not only leads to more effective models but also lays down the groundwork for broader, real-world applications. Imagine crafting a virtual assistant that not only assists you but also knows when it has provided the right guidance and can confirm it internally, vastly improving user trust and interaction quality.

Beyond the numbers, the implications of this research are far-reaching. As AI systems become more adept at self-evaluation, we can expect significant improvements across various sectors, including healthcare, finance, and education. Consider a medical diagnostic AI that can self-verify its conclusions, thereby reducing the chance of human error and enhancing decision-making processes in real-time clinical settings. This development resonates with the historical evolution of technology—from the introduction of programmable calculators to today’s sophisticated AI models. Moreover, the ongoing discourse around the ethical deployment of AI technologies emphasizes the necessity of self-awareness in AI systems, ensuring they function transparently and accountably. As we navigate the complexities of intelligent systems, initiatives like this exemplify a crucial step towards more responsible and effective AI, bridging the gap between technological promise and societal needs.

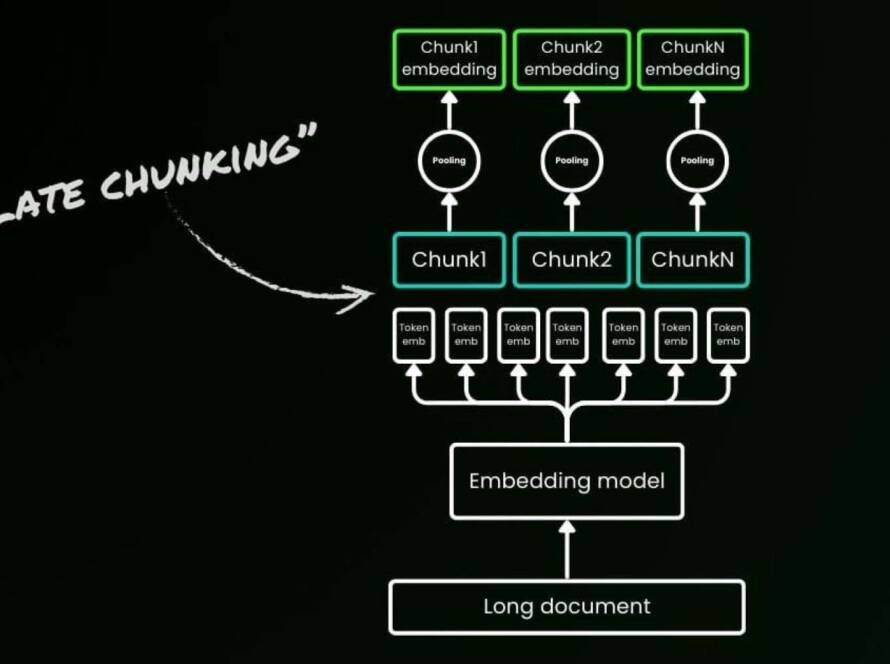

Understanding Hidden-State Probes in AI

In an era where efficiency and accuracy are paramount, the introduction of hidden-state probes marks a pivotal shift in how reasoning models operate. These ingenious tools allow AI to self-verify not just outputs but also the quality of the internal processes that lead to those outputs. Imagine an orchestra conductor who not only leads musicians but also continually tunes them to ensure a flawless performance. Hidden-state probes act similarly, monitoring the inner workings of AI models—checking that each decision point resonates with the desired melody of logic. Researchers from NYU have demonstrated that this method can cut token usage by 24%, a game-changer given the rising costs and environmental impacts associated with extensive computational processes. The ability to self-verify means models can operate more autonomously, requiring less constant oversight, while still improving their performance and reliability.

This innovation is particularly significant for sectors heavily reliant on AI, such as finance, healthcare, and content generation. For instance, next time you use an AI tool for generating reports, think about the implications of those hidden-state probes ensuring accuracy. It’s akin to having a financial advisor who not only suggests investments but also constantly checks market conditions, ensuring that the advice remains sound and timely. Furthermore, as AI models become more integrated into decision-making processes across industries, the capacity for self-verification expands the safety net against errors, thereby fostering greater trust in the technology. It’s no wonder industry leaders are taking note; as artificial intelligence continues to intertwine with various sectors, innovations like this redefine not only model performance but also the very architecture of trust within AI systems.

| AI Model Feature | Traditional Approach | With Hidden-State Probes |
|---|---|---|
| Token Usage | Higher due to redundancy | Reduced by 24% |
| Self-Verification | Manual checks required | Automated, real-time checking |
| User Trust | Still developing | Increased through verifiable outputs |

The drive for efficiency isn’t just about metrics; it’s about reimagining how AI collaborates with human intelligence. This technological advancement resonates deeply with the principles behind adaptive learning—essentially teaching machines not just to learn from data but also to learn from their own confidence levels and decision paths. Let’s keep an eye on this trend as hidden-state probes could become foundational in creating more transparent, accountable, and ultimately effective AI systems. Embracing such innovations will be vital as we chart the future of AI operating alongside human agency—after all, in the world of emerging technologies, better self-awareness can lead to a more informed and constructive dialogue concerning the boundaries of ethical AI.

Mechanisms Behind Efficient Self-Verification

The introduction of hidden-state probes in self-verification mechanisms is nothing short of a paradigm shift. By allowing reasoning models to assess their own correctness, we’re entering a territory where AI can be more than just a tool, but an active participant in its own evaluation process. This technology utilizes a lightweight architecture to keep track of model decisions, essentially acting like a self-checking GPS for AI outputs. The magic lies in its ability to dynamically adjust its operations based on internal states, which conserves token usage—a significant 24% reduction! Imagine navigating through dense data traffic with ease, constantly recalibrating your route for efficiency rather than blindly following a pre-defined path.
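To make the idea of a lightweight probe over internal states more concrete, here is a minimal sketch. It assumes the probe is a simple logistic-regression classifier that maps a hidden-state vector to a probability that the current answer is correct; the article does not specify the probe's architecture, so this is an illustration rather than the researchers' method. The "hidden states" below are synthetic random vectors, and names such as `train_probe` and `probe_confidence` are hypothetical.

```python
import math
import random


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def probe_confidence(weights, bias, hidden_state):
    """Estimated probability that the answer behind this hidden state is correct."""
    z = bias + sum(w * h for w, h in zip(weights, hidden_state))
    return sigmoid(z)


def train_probe(states, labels, lr=0.5, epochs=200):
    """Fit a logistic-regression probe with plain batch gradient descent."""
    dim = len(states[0])
    weights = [0.0] * dim
    bias = 0.0
    n = len(states)
    for _ in range(epochs):
        grad_w = [0.0] * dim
        grad_b = 0.0
        for h, y in zip(states, labels):
            err = probe_confidence(weights, bias, h) - y
            for i in range(dim):
                grad_w[i] += err * h[i]
            grad_b += err
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


# ASSUMPTION: synthetic stand-ins for hidden states, not real model activations.
# "Correct" examples are shifted in one direction, "incorrect" ones in the other.
rng = random.Random(0)


def make_example(correct: bool):
    shift = 1.0 if correct else -1.0
    return [rng.gauss(shift, 1.0) for _ in range(4)]


train_labels = [i % 2 for i in range(200)]
train_states = [make_example(bool(y)) for y in train_labels]
weights, bias = train_probe(train_states, train_labels)

test_labels = [i % 2 for i in range(100)]
test_states = [make_example(bool(y)) for y in test_labels]
predictions = [probe_confidence(weights, bias, h) >= 0.5 for h in test_states]
accuracy = sum(p == bool(y) for p, y in zip(predictions, test_labels)) / len(test_labels)
print(f"held-out probe accuracy: {accuracy:.2f}")
```

In a real setting the training labels would come from checking intermediate answers against ground truth, and the vectors would be read from the model itself; both of those steps are outside this sketch.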

In practical applications, think about the ripple effects this development could have across various sectors, from education to healthcare. In educational platforms powered by AI, models employing self-verification could offer personalized feedback to students while safeguarding against misinformation. In healthcare, diagnostics powered by reasoning models could enhance decision-making, yielding not only quicker results but also fostering a more nuanced understanding of patient conditions. Here lies a critical intersection of technology and ethics where self-aware AI could alleviate burdens on professionals while ensuring accountability through accurate self-assessment. The underlying aspiration is that AI should enhance human life, not complicate it. As we unravel the layers of this self-verifying technology, I can’t help but wonder how it might interact with emerging regulatory landscapes: will it drive more rigorous standards for accountability in AI deployments? The stakes are undeniably high as the technology we develop today will shape the ecosystem of tomorrow.

| Key Features | Benefits | Real-World Application |
|---|---|---|
| Dynamic Self-Assessment | Improved accuracy in outputs | Personalized learning platforms |
| Reduced Token Usage | Cost efficiency in processing | Healthcare diagnostics |
| Hidden-State Probing | Enhanced decision-making transparency | Autonomous systems in transportation |

Impact of the Hidden-State Probe on Token Usage

The introduction of the Hidden-State Probe marks a significant evolution in the efficiency of token usage within reasoning models. This innovative tool not only enhances the model’s ability to self-verify its outputs but actively contributes to a 24% reduction in token consumption. For those familiar with the intricacies of AI models, this is akin to having a more efficient engine – one that allows the vehicle (the model) to run smoothly on fewer resources, driving down costs and optimizing performance. This reduction in token usage does not simply represent savings; it also represents an ecological shift in how we approach AI training and deployment. The Hidden-State Probe integrates self-awareness, enabling models to determine when they are operating optimally, thus streamlining processes that often lead to resource waste through unnecessary iterations.
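To put the 24% figure in concrete terms, the short calculation below works through what the reduction would mean for a token budget. The 24% value is the reported figure; the baseline volume and the price per million tokens are purely hypothetical numbers chosen for illustration.

```python
def tokens_after_reduction(baseline_tokens: float, reduction: float = 0.24) -> float:
    """Tokens consumed after applying a fractional reduction (0.24 = 24%)."""
    return baseline_tokens * (1.0 - reduction)


def cost_usd(tokens: float, price_per_million_usd: float) -> float:
    """Cost of a token volume at a flat per-million-token price."""
    return tokens / 1_000_000 * price_per_million_usd


# ASSUMPTIONS: hypothetical workload and price, not figures from the research.
baseline_tokens = 10_000_000
price_per_million_usd = 2.00

reduced_tokens = tokens_after_reduction(baseline_tokens)
baseline_cost = cost_usd(baseline_tokens, price_per_million_usd)
reduced_cost = cost_usd(reduced_tokens, price_per_million_usd)
savings = baseline_cost - reduced_cost

print(f"tokens: {baseline_tokens:,} -> {reduced_tokens:,.0f}")
print(f"cost:   ${baseline_cost:.2f} -> ${reduced_cost:.2f} (saves ${savings:.2f})")
```

Under a flat per-token price the cost saving is the same 24% as the token saving; real pricing often differs between input and output tokens, so actual savings could vary.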

This technological advancement aligns with broader shifts in AI that prioritize not just performance, but sustainability. As models become increasingly complex, the need to manage computational costs and energy consumption grows paramount. When we consider the implications of these developments across various sectors, the potential ramifications are wide-reaching. Industries such as healthcare, where AI-driven solutions analyze vast datasets, can immensely benefit from reduced token usage, allowing for faster, more cost-effective diagnostics. Sustainability-minded companies may find themselves drawn to this innovation, as it creates a clear pathway towards not only efficiency but also an environmentally-conscious approach to AI. Ultimately, the introduction of the Hidden-State Probe is not just a technical marvel; it’s a clarion call for a more responsible, resource-aware future in artificial intelligence.

Evaluation of Model Accuracy and Confidence Levels

The introduction of a hidden-state probe as described by NYU researchers represents a significant leap in our understanding of model accuracy and self-verification mechanisms. Essentially, this innovation allows models to assess their own confidence levels while minimizing token usage, which is crucial for efficient data processing. Token efficiency is paramount, especially as the demand for scalable AI applications in industries like finance, healthcare, and autonomous systems grows. An intriguing analogy to this concept is that of a safety net; a model equipped with a self-verification probe behaves like a high-wire artist who doesn’t just perform without checks, but assesses the safety of every step taken, reducing the chances of falling into the abyss of inaccuracies.
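One common way to check whether a model's stated confidence can be trusted is expected calibration error (ECE), which compares average confidence to observed accuracy within confidence bins. The article does not say which metric the researchers used, so the sketch below is only an illustration of how such an evaluation might look; the sample confidences and correctness labels are made up.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Weighted average gap between mean confidence and accuracy per bin."""
    bins = [[] for _ in range(n_bins)]
    for c, y in zip(confidences, correct):
        # Clamp so that a confidence of exactly 1.0 falls in the last bin.
        idx = min(int(c * n_bins), n_bins - 1)
        bins[idx].append((c, y))

    total = len(confidences)
    ece = 0.0
    for bucket in bins:
        if not bucket:
            continue
        avg_conf = sum(c for c, _ in bucket) / len(bucket)
        accuracy = sum(y for _, y in bucket) / len(bucket)
        ece += len(bucket) / total * abs(avg_conf - accuracy)
    return ece


# ASSUMPTION: toy data. Ten answers, each given 0.9 confidence.
well_calibrated = expected_calibration_error([0.9] * 10, [1] * 9 + [0])
overconfident = expected_calibration_error([0.9] * 10, [1] * 5 + [0] * 5)

print(f"ECE when 9 of 10 are right: {well_calibrated:.3f}")
print(f"ECE when 5 of 10 are right: {overconfident:.3f}")
```

A lower value means the confidence scores track actual correctness more closely, which is the property that makes a self-verification signal usable in practice.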

To illustrate the impact of greater accuracy and self-awareness within models, we can break down its benefits. Here are some key aspects:

- Increased Trust: Stakeholders can have greater confidence in AI systems that demonstrate self-awareness.

- Cost Reduction: By efficiently verifying their outputs, models can save up to 24% in token usage, translating into substantial cost savings for businesses relying on AI.

- Enhanced Scalability: The ability for models to self-verify makes them more adaptable to various applications, from smart financial advisors to healthcare diagnostics.

Consider the following table that summarizes the comparative impacts of traditional models versus those utilizing hidden-state probes:

| Feature | Traditional Models | Hidden-State Probe Models |
|---|---|---|
| Token Usage | Full quota | 24% reduction |
| Verification Process | Post-output verification | Real-time self-assessment |
| Applicability | Limited | Highly scalable across sectors |

In sectors such as healthcare, where misdiagnosis can be catastrophic, relying on AI systems with built-in self-verification can drastically reduce error rates, thus safeguarding public trust. My experiences deploying various AI models reflect that this type of accountability is no longer a luxury but a necessity. As we pivot towards greater integration of AI in daily life and workspaces, the implications of this hidden-state probe extend well beyond mere efficiency metrics; they signal a paradigm shift in how we approach the underlying architecture of intelligent systems. Such advancements not only enhance performance but also foster a more responsible AI landscape, one that respects user engagement and ethical considerations.

Comparative Analysis of Traditional vs. New Models

The comparison between traditional reasoning models and groundbreaking architectures like the one introduced by NYU researchers reveals a transformative perspective on AI operations. Traditional models often rely on fixed parameters and defined pathways, rendering them somewhat static in the evolving landscape of real-world applications. Through the lens of my experience, these models can be likened to a well-intentioned but somewhat myopic map—accurate but often missing the breadth of a dynamic terrain. In contrast, the new models equipped with a hidden-state probe allow for an adaptive, self-verifying mechanism that enhances performance while significantly reducing token usage. This progression not only conserves computational resources but also opens the door for more complex reasoning processes, akin to a GPS system that continuously updates based on real-time information and user input.

When we consider the implications of this development, it becomes clear that efficiency in AI doesn’t just yield technical benefits; it resonates across various sectors, from healthcare to finance. For instance, consider an AI model deployed in a medical diagnostics application. Traditional systems may lag in accurately assessing nuanced patient data, whereas self-verifying models can quickly pivot based on feedback, delivering insights with greater reliability and thus improving patient care outcomes. The following table illustrates key differences between the traditional models and the novel approach:

| Feature | Traditional Models | New Models with Hidden-State Probe |
|---|---|---|
| Parameter Fixity | Rigid, fixed parameters | Dynamic, adaptive adjustments |
| Self-Verification | No self-checking | Efficient self-verification process |
| Token Efficiency | Higher token usage | Reduced by 24% |

This shift represents more than just technical evolution; it’s a philosophical rethinking of how AI can function autonomously and intelligently within the real world. Just as the advent of the internet revolutionized communication by enabling interactive content and real-time updates, the emergence of self-verifying models stands to redefine the relationship between AI and its user base. It’s fascinating to consider the broader implications: as we streamline processes and enhance accuracy, we’re not only improving specific applications but also paving the way for more significant innovations in sectors that can benefit from the agility and precision these models provide.

Real-World Applications of Enhanced Reasoning Models

The integration of enhanced reasoning models into practical applications is not merely a theoretical exercise; it’s transforming industries in profound ways. Imagine a healthcare system where diagnostic AI doesn’t just perform tests but verifies its conclusions against probabilistic models of patient outcomes. This level of self-verification allows for more accurate diagnoses while slashing unnecessary resource expenditures. For instance, AI-assisted radiology tools now have the capability to evaluate their own confidence in identifying tumors, leading to a dramatic decrease in false positives and fewer invasive follow-up tests. In this context, the self-verification enabled by hidden-state probing not only enhances the AI’s performance but also instills greater trust among healthcare providers and patients alike.

Beyond healthcare, the implications for sectors like finance and autonomous driving are equally compelling. In finance, trading algorithms equipped with advanced reasoning capabilities can assess their predictive accuracy in real-time, adjusting their strategies to maximize profitability and minimize risk. By reducing token usage—we’re talking about computational load—these models lower operational costs and enhance efficiency. For instance, DeFi platforms can navigate dynamic market conditions with an astuteness born from self-verification, laying the groundwork for stronger financial decision-making. As we venture deeper into a world where AI interacts with human domains, these enhanced reasoning models will not only streamline operations but also reshape our expectations of what AI can achieve. The shift isn’t just about efficiency—it’s about fundamentally altering the landscape of trust, accountability, and human-machine collaboration.

Challenges in Implementing Self-Verification Techniques

Implementing self-verification techniques in reasoning models has transformative potential, yet it’s not without its hurdles. One significant challenge lies in the interpretability of the models themselves. Often, these systems operate as “black boxes,” making it hard for researchers to understand the internal logic that leads to a particular decision. For instance, a hidden-state probe might reveal when a model realizes it is incorrect, but understanding the why behind this realization can be complex. This interpretability gap raises concerns about trust and reliability, particularly in high-stakes environments like healthcare or finance, where erroneous outputs can lead to severe repercussions. To put it simply, we’re asking systems to self-diagnose errors while still grappling with understanding their reasoning processes, akin to asking a blindfolded chef to critique their own dish without being able to taste it first.

Moreover, there’s the issue of resource allocation. While reducing token usage by 24% is a substantial benefit, it also prompts us to ask about the fine print: Are we sacrificing accuracy for efficiency? Efficiency gains can lead to faster processing times, but we must be cautious about the trade-offs involved. In the context of on-chain data systems, where accuracy is paramount, the instant benefits of streamlined processing could be overshadowed by the long-term impacts of potentially flawed outputs. In my experience, it’s crucial to balance these competing demands, like a juggler expertly managing multiple objects in the air. Transitioning from a traditional model to one that integrates self-verification might require a comprehensive rethink of existing frameworks, ensuring that stakeholders from various sectors understand not just the technology but the implications of its use. Acknowledging these challenges is crucial for pushing the boundaries of what AI can achieve while also safeguarding its application across diverse fields.
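The accuracy-versus-efficiency question can be made measurable. A minimal sketch, assuming the system accepts an answer without further checking whenever the probe's confidence clears a threshold: lowering the threshold accepts more answers (more savings) but may let more wrong ones through. The confidences and labels below are invented for illustration, and `coverage_and_precision` is a hypothetical helper name.

```python
def coverage_and_precision(confidences, correct, threshold):
    """Share of answers accepted at this threshold, and how many of those are right."""
    accepted = [y for c, y in zip(confidences, correct) if c >= threshold]
    coverage = len(accepted) / len(confidences)
    precision = sum(accepted) / len(accepted) if accepted else None
    return coverage, precision


# ASSUMPTION: toy probe confidences paired with whether each answer was correct.
confidences = [0.95, 0.90, 0.85, 0.70, 0.60, 0.55, 0.40, 0.30]
correct = [1, 1, 1, 0, 1, 0, 0, 0]

for threshold in (0.8, 0.5):
    coverage, precision = coverage_and_precision(confidences, correct, threshold)
    print(f"threshold={threshold}: coverage={coverage:.3f}, precision={precision:.3f}")
```

Sweeping the threshold on held-out data like this is one way to decide how much efficiency a deployment can claim before accuracy starts to suffer.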

Future Implications for AI Research and Development

The introduction of the hidden-state probe technique by researchers at NYU signifies a compelling shift in the landscape of AI, particularly in the realm of reasoning models. This innovation isn’t merely a technological leap; it holds profound implications for AI’s future, especially in applications where accuracy and efficiency are paramount. With an impressive reduction of token usage by 24%, we can foresee a scenario where AI-driven solutions become more accessible and affordable for businesses and individuals alike. As we streamline these complex models, we pave the way for more extensive deployment in sectors like healthcare, finance, and education, where operational costs are critical. By embracing self-verification, these models not only improve efficiency but also enhance trust, which is often a major barrier in the widespread adoption of AI technologies.

This development resonates deeply with my experiences in working with AI models that falter due to excessive complexity or overwhelming data demands. The ability of reasoning models to effectively ‘know’ when they’re correct is reminiscent of how skilled professionals apply their intuition to make swift decisions amid ambiguity. It’s an exciting time as we consider how this self-assuredness influences regulatory frameworks and ethical standards surrounding AI. For instance, organizations like the Partnership on AI are grappling with the ramifications of building autonomous systems that validate their outputs. This evolving landscape urges us to reconsider the relationship between human oversight and machine autonomy, driving home the necessity for an ongoing dialogue between technologists and policymakers. As we push these boundaries, the implications of more reliable AI extend far beyond technical minutiae, potentially reshaping industries and what we ultimately expect from intelligent systems.

Recommendations for Practitioners and Researchers

As researchers delve deeper into the understanding and deployment of reasoning models, a vital consideration is the profound implications of self-verification mechanisms like the hidden-state probe introduced by NYU researchers. These systems not only enhance efficiency by reducing token usage, they also usher in a new era where models can independently assess their correctness. For practitioners in AI development, this means a significant shift in debugging paradigms; instead of passively crafting error-handling responses, developers can now leverage these capabilities to create more robust, resilient applications. I’ve often witnessed frustrations among data scientists where models produce unexpected outputs with uncanny frequency. With self-verification, those frustrations could diminish, leading to smarter, self-correcting systems that alleviate the resource drain on computational power and developer time.

Moreover, the implications of this technology extend beyond mere efficiency; they touch upon ethical dimensions within AI. A self-verifying model carries the potential for greater reliability in domains demanding high stakes reasoning—such as healthcare, finance, and autonomous vehicles—where incorrect outputs can have grave consequences. Practitioners should advocate for the integration of these self-verification capabilities in their systems, not merely as a luxury but as a necessity. Researchers, too, must prioritize exploring the nuances of these models’ decision-making processes, embracing interdisciplinary collaborations that include ethicists and domain experts. A potential roadblock, however, is the propensity to overlook the context; fewer tokens used doesn’t just mean faster computations, it translates to less energy consumed, aligning with global sustainability goals. This raises ongoing conversations about AI’s carbon footprint and societal responsibilities, demanding a forward-thinking strategy that transcends the realm of algorithms.

Ethical Considerations in AI Verification Methods

As AI systems continue to evolve, the ethical considerations surrounding verification methods cannot be overlooked. The introduction of self-verifying models, like the one presented by NYU researchers, paves the way for autonomous AI that can evaluate its own accuracy, fundamentally changing how we engage with these tools. This technology not only streamlines performance by reducing token usage but also has significant implications for accountability and trustworthiness in AI outputs. When a 24% reduction in token usage can translate into substantial savings and better resource allocation, the ethical dimensions of misuse or over-reliance on such technologies become all the more critical. Navigating this landscape requires an understanding of the delicate balance between innovation and responsibility.

Moreover, the intersection of self-verification methods with areas such as healthcare, financial services, and governance raises questions about societal impact. For instance, consider a healthcare chatbot equipped with self-verifying capabilities: while it can reduce the chances of erroneous medical advice, it can also lead to overconfidence in its recommendations, carelessly guiding patients without adequate human oversight. The anecdote of the automated trading system that faltered due to a minor bug underscores the urgency for robust fail-safes in even the most advanced AI technologies. Thus, as we integrate these systems, we must establish ethical guardrails that promote responsible use, ensuring that as AI evolves, it does so in a way that prioritizes public welfare above mere efficiency gains. The future of AI verification is bright, but it must always be tempered with an unwavering commitment to ethics and societal good.

Potential Limitations of Current Findings

While the introduction of the hidden-state probe marks a significant advancement in AI reasoning models, there are inherent limitations to the findings that warrant reflection. One primary concern is the subjectivity involved in self-verification. AI models, particularly those operating in probabilistic spaces, may possess a limited capacity to accurately gauge their own correctness. This self-evaluation is heavily influenced by the training data and underlying algorithms, which may not encompass all possible scenarios. Without comprehensive datasets, the models might generate confidence in flawed outputs, potentially leading to erroneous conclusions. This limitation is akin to a math student who masters problems only within a certain type of question—they might struggle with novel problems outside their prior experience.

Moreover, efficiency gains of 24% in token usage might not universally translate into performance improvements across all applications. In certain domains, particularly those requiring nuanced understanding or creativity—like natural language processing for poetry or complex legal interpretation—reducing token usage could inadvertently strip away the richness of language. This trade-off poses implications that could ripple through various sectors. For instance, businesses reliant on AI for customer interactions might experience a drop in engagement if the models prioritize brevity over depth. In this context, the balance between efficiency and quality becomes crucial, and caution is needed when scaling these findings from academic labs to real-world applications. If the industry rushes to adopt these models without a thorough understanding of their limitations, it could backfire, resulting in AI systems that not only fail to meet user expectations but also cast doubt on the reliability of AI as a whole.

Continued Research Directions for NYU Team

The introduction of a hidden-state probe marks a pivotal advancement in AI reasoning models, particularly for our team at NYU. As a practitioner deeply embedded in the nuances of AI development, I recognize the profound impact of this innovation—not just for efficiency, but for enhancing the integrity of AI outputs. The ability of an AI to effectively self-verify its outputs without excessive computational strain indicates a significant leap towards creating robust models capable of more accurate predictions. This resonates with past iterations of AI used in sectors like finance and healthcare, where inaccuracies could cost millions. We can think of it like a seasoned chess player—knowing the outcome of its move before the opponent reacts—but now, equipped with an understanding of why it trusts its strategy.

In exploring further research avenues, we anticipate diving into the implications of these self-verification mechanisms across various disciplines. Consider the intersection of AI with climate science; recent conversations have illuminated how smart models can potentially reduce operational waste by optimizing resource allocation, mirroring how our hidden-state probe optimizes token usage. Key areas for exploration include:

- The integration of self-verification methods in natural language processing for more context-aware chatbots.

- Enhancements in autonomous systems where real-time decision-making is critical, such as in disaster response algorithms.

- Investigating the ethical dimensions of AI self-awareness and its ramifications in governance and policy-making.

These directions not only aim to amplify model performance but also ensure we remain cognizant of ethical implications, essentially marrying efficiency with accountability. A quote by Alan Turing springs to mind: “We can only see a short distance ahead, but we can see plenty there that needs to be done.” Indeed, this encapsulates our team’s commitment to pioneering responsible AI development, ensuring that newfound efficiencies lead to tangible benefits for society at large.

Conclusion and Broader Impact on AI Technologies

As we delve deeper into AI technologies, the emergence of self-verifying reasoning models represents a significant turning point, akin to the introduction of error-correcting codes in digital communications. These models, particularly the hidden-state probe introduced by NYU researchers, hold profound implications for fields like natural language processing, autonomous systems, and even finance. By enabling models to effectively assess their confidence in their own decisions, we shift the paradigm from mere execution of tasks to a more nuanced understanding of AI operation—much like teaching a child not only to solve math problems but to also explain the reasoning behind their solutions. This change addresses a fundamental flaw in previous models: the lack of self-awareness regarding their reliability, which can lead to deleterious outputs particularly in high-stakes environments.

The reported 24% reduction in token usage, achieved alongside improved reliability, matters for resource allocation as well as for model efficiency. Businesses could deploy more effective AI at lower cost and redirect the savings into further AI projects or AI literacy programs. Self-verification can also assist in regulatory compliance, an area where transparency is not just appreciated but required. As AI spreads through sectors such as healthcare, finance, and the creative arts, a habit of “thinking before executing” encourages more responsible use. It raises the bar for ethical AI applications and offers a new lens on the relationship between technology and societal needs. Adopting such advances is a way of shaping the trajectory of AI so that it aligns with human values and aspirations, rather than merely following it.

| Aspect | Traditional Models | Self-Verifying Models |
|---|---|---|
| Token Efficiency | Standard Usage | Reduced by 24% |
| Self-Awareness | No | Yes |
| Application Areas | Basic Tasks | Complex Decision-Making |

Q&A

Q&A on “Reasoning Models Know When They’re Right: NYU Researchers Introduce a Hidden-State Probe That Enables Efficient Self-Verification and Reduces Token Usage by 24%”

Q1: What is the main focus of the research conducted by NYU researchers?

A1: The primary focus of the research is to develop a hidden-state probe that allows reasoning models to verify their own outputs for accuracy, enhancing their decision-making capabilities and efficiency.

Q2: How does the hidden-state probe work?

A2: The hidden-state probe utilizes internal states of the reasoning model to assess whether the generated outcomes are correct. By enabling the model to self-verify its responses, it can make adjustments or confirmations based on its self-assessment.
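
The idea can be illustrated with a minimal Python sketch. It is not the NYU team's implementation: the linear form of the probe, the toy weights, the two-dimensional hidden states, and the 0.9 threshold are all assumptions chosen for illustration. The sketch scores each intermediate hidden state with a probe and stops at the first step where the estimated probability of a correct answer is high enough.

```python
import math
from typing import Optional, Sequence


def probe_confidence(hidden_state: Sequence[float],
                     weights: Sequence[float],
                     bias: float) -> float:
    """Linear probe followed by a sigmoid.

    Returns an estimated probability that the model's current
    intermediate answer is correct (assumed probe form).
    """
    logit = sum(h * w for h, w in zip(hidden_state, weights)) + bias
    return 1.0 / (1.0 + math.exp(-logit))


def first_confident_step(hidden_states: Sequence[Sequence[float]],
                         weights: Sequence[float],
                         bias: float,
                         threshold: float = 0.9) -> Optional[int]:
    """Index of the first reasoning step whose probe confidence
    reaches the threshold, or None if no step does."""
    for step, hidden_state in enumerate(hidden_states):
        if probe_confidence(hidden_state, weights, bias) >= threshold:
            return step
    return None


# Hypothetical probe parameters and hidden states (one per reasoning step).
weights = [2.0, -1.0]
bias = 0.0
steps = [[0.0, 0.0], [1.0, 0.5], [2.0, 0.0]]

print(first_confident_step(steps, weights, bias))  # 2
```

In this toy run the probe's confidence rises across the three steps and crosses the threshold at the last one. In a real system, any reasoning tokens that would have been generated after the stopping step are the ones saved; raising the threshold trades fewer early exits for more caution.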

Q3: What are the benefits of this approach?

A3: The key benefits include improved accuracy in the model’s outputs, as well as a significant reduction in token usage—reportedly by 24%. This efficiency can contribute to lower computational costs and faster processing times during model inference.

Q4: Why is reducing token usage important in reasoning models?

A4: Reducing token usage is crucial because it directly impacts the computational resources required for processing. In large-scale language models, fewer tokens lead to reduced memory consumption, lower energy costs, and faster completion times for tasks. This makes the models more efficient and accessible for practical applications.
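
As a rough illustration of the arithmetic, the sketch below applies the reported 24% reduction to a hypothetical baseline of 10,000 reasoning tokens. The function name and the baseline figure are assumptions for illustration only; actual savings depend on the model and the task.

```python
def tokens_after_reduction(baseline_tokens: int, reduction: float = 0.24) -> int:
    """Token count remaining after a fractional reduction (rounded)."""
    return round(baseline_tokens * (1.0 - reduction))


baseline = 10_000  # hypothetical baseline token count
remaining = tokens_after_reduction(baseline)
saved = baseline - remaining

print(remaining, saved)  # 7600 2400
```

Because inference cost scales with the number of generated tokens, a saving of this size applies to every query, so it compounds across large workloads.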

Q5: What implications does this research have for the future of artificial intelligence?

A5: This research suggests that AI models can become more self-reliant, potentially leading to more reliable systems capable of independent verification. It opens avenues for enhancing the robustness of AI applications in various fields, such as natural language processing, automated reasoning, and decision-making systems.

Q6: Were there any specific applications or examples mentioned in the research?

A6: While the research primarily discusses the theoretical framework and efficiency of the hidden-state probe, it emphasizes its potential applications in scenarios requiring high accuracy and quick decisions, such as legal analysis, scientific research, and customer service automation.

Q7: Is this research purely theoretical, or has it been tested in practice?

A7: The research includes empirical testing where the hidden-state probe has been applied to existing reasoning models, demonstrating its effectiveness in both accuracy and efficiency in varied tasks.

Q8: Who are the key researchers involved in this study?

A8: The research was conducted by a team at New York University; the individual researchers are not named in this article.

Q9: What is the significance of this advancement in the context of recent developments in AI?

A9: As discussions around AI safety, transparency, and efficiency grow, methods that let reasoning models verify their own outputs represent a meaningful step toward more trustworthy and practical AI systems.

The Way Forward

In conclusion, the NYU research advances reasoning models by introducing a hidden-state probe. The approach enables efficient self-verification and delivers a reported 24% reduction in token usage, which points toward more effective and resource-efficient natural language processing systems. By improving a reasoning model's ability to assess its own correctness, this development could benefit a range of AI applications and change how these systems behave in real-world scenarios. As the field evolves, further exploration and refinement of such methods will be important for advancing the capabilities of intelligent systems.